NVIDIA announced new products and initiatives to help meet the enormous processing demands of generative AI and large language models at Computex 2023 in Taiwan, running through June 2. The acronym AI has been uttered more in one day than in the entire show last year, as nearly all major tech companies are racing to gain a foothold in the explosive deep learning and AI market. NVIDIA is currently one of the dominant players due to its position as a leading GPU designer, releasing products for acceleration of deep learning applications as early as 2016.

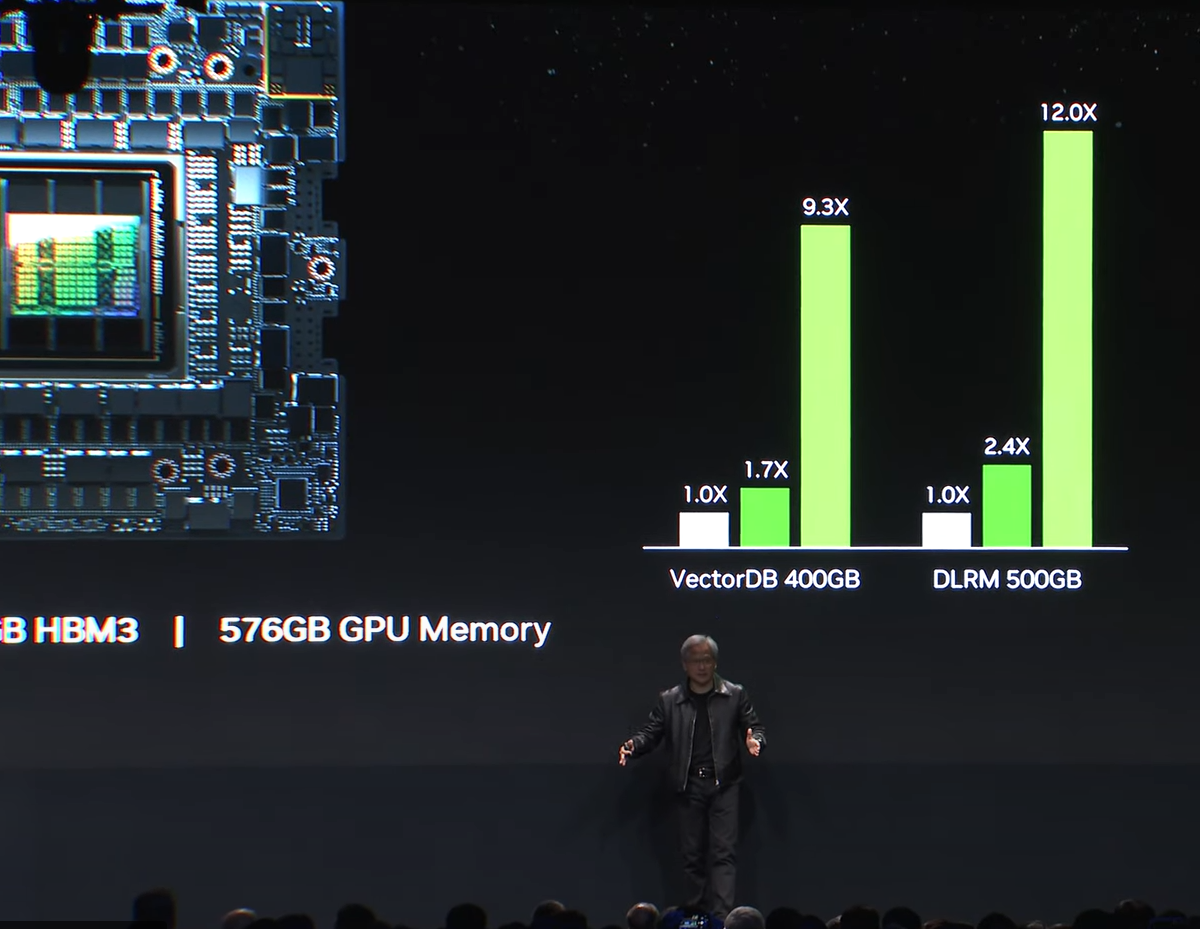

To kick off a series of releases, NVIDIA announced that its GH200 Grace Hopper Superchip, which combines its Arm-based Grace CPU and Hopper GPU architecture bridged with NVIDIA’s NVLink-C2C interconnect, has entered full production. The new interconnect allows for 900 gigabytes per second of total bandwidth, 7x higher than PCIe Gen5 lanes found in most accelerated systems, with 30x higher aggregate system memory bandwidth compared to its DGX A100 system.

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services, and many more industries,” says Ian Buck, vice president, accelerated computing, NVIDIA. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises need to build and deploy generative AI applications that leverage their unique proprietary data.”

Wasting no time after announcing Grace Hopper’s full production, NVIDIA’s next announcement told the world what it’s doing with it—a new class of large memory AI supercomputer, dubbed the DGX GH200. This new monster system combines 256 Grace Hopper Superchips, sharing memory space with its NVLink interconnect and NVLink Switch System, allowing it to function as a single GPU offering 1 exaflop of performance and 144 terabytes of shared memory. That equates to 500x more memory than a single DGX A100 system, and 10x more bandwidth than the previous generation. With the latest generation of leading-edge generative AI models often exceeding the memory space of an entire system, the DGX GH200 will be very attractive to those seeking to run these massive workloads.

NVIDIA didn’t disclose pricing or an exact shipping date, noting only that it should be available by the end of 2023. It did state that its hyperscale partners would have access to it first, calling out Google, Meta, and Facebook specifically, to explore its capabilities for generative AI workloads. It also intends to provide the design to cloud service providers and other hyperscalers looking to customize it for their infrastructure.

With more industries and data centers looking to leverage large language models, AI inference, large data models, and digital twins, etc., more are looking to design and customize their own solutions for acceleration. “The challenge to creating a new server for an enterprise or cloud data center is time and money,” says Buck. “Upwards of millions of dollars and can take as long as 18 months to develop.”

Therefore, NVIDIA unveiled a new server specification, NVIDIA MGX, which provides system manufacturers a modular reference architecture to “quickly and cost-effectively build more than 100 server variations to suit a wide range of AI, high-performance computing, and Omniverse applications.”

Manufacturers start with a basic system architecture for a given chassis, and then select the GPU, DPU, and CPU. Its modular design gives manufacturers the ability to customize each system for customers that may have specific requirements around budget, power delivery, and thermals. Differing from HGX, MGX offers multigenerational compatibility with NVIDIA products so system builders can reuse existing designs as next-generation products emerge. Says Buck, “With the new MGX reference architecture, we see the possibility to create new designs in as little as two months, at a fraction of the cost.”

NVIDIA’s solutions for accelerating generative AI in the cloud doesn’t stop in the server. The company announced Spectrum-X, a new high-performance Ethernet networking platform designed to improve performance and efficiency of Ethernet-based AI clouds.

Spectrum-X is built on a “tight coupling” of the new NVIDIA Spectrum-4 Ethernet Switch and the NVIDIA BlueField-3 DPU to create an end-to-end 400GbE network optimized for AI clouds. This combination, according to NVIDIA, provides 1.7x better overall AI performance and power efficiency, as well as more consistent performance in multitenant environments when compared to traditional Ethernet. It’s designed to scale as well, starting with 256 200Gb/s ports connected by a single switch, or 16,000 ports in a two-tier leaf spine topology.

Hardware wasn’t its only area of focus. NVIDIA shared details on how it is looking to digitize the world’s largest industries. NVIDIA Founder and CEO Jensen Huang took the stage showcasing some examples on how manufacturers are using its Omniverse platform to advance their industrial digitalization efforts.

“The world’s largest industries make physical things. Building them digitally first can save enormous costs,” says Huang. “NVIDIA makes it easy for electronics makers to build and operate virtual factories, digitalize their manufacturing and inspection workflows, and greatly improve quality and safety while reducing costly last-minute surprises and delays.”

At the platform level, Omniverse connects the world’s leading 3D simulation and generative AI providers. The open development platform, for example, lets teams build interoperability between their favorite applications—such as those from Adobe, Autodesk, and Siemens. It can be used to create digital twins of production lines or workflows, run simulations, testing, and more. The company also recently announced NVIDIA Omniverse Cloud, a platform-as-a-service now available on Microsoft Azure, which gives enterprise customers access to the full-stack suite of Omniverse software applications.

Finally, NVIDIA announced ACE for Games, which builds on the Omniverse’s avatar cloud engine (hence ACE for Games). It’s a custom model building service that uses generative AI to enable intelligent game characters capable of natural language interaction. Historically, interaction with game characters has generally been limited to scripted, canned responses to known inputs.

NVIDIA believes that middleware developers can leverage NVIDIA’s technologies to allow players to speak to these in-game characters directly, and have the characters return realistic, natural responses. To function properly, the language models will have to be tailored for each game and character, otherwise the responses returned may not fit within the context of the game, but that could be challenging for developers to script deep narratives without knowing exactly what dialogue the player could see. ACE modules will be deployable across GeForce PC and cloud sometime in the future, though no specific date was announced. Interested developers are encouraged to sign up for its early access program.

NVIDIA isn’t alone in AI related announcements at Computex. Intel also had some new tech to show off, sharing its vision for client-side AI with Meteor Lake’s VPU for AI acceleration in its upcoming 14th Generation Mobile Core Processors.