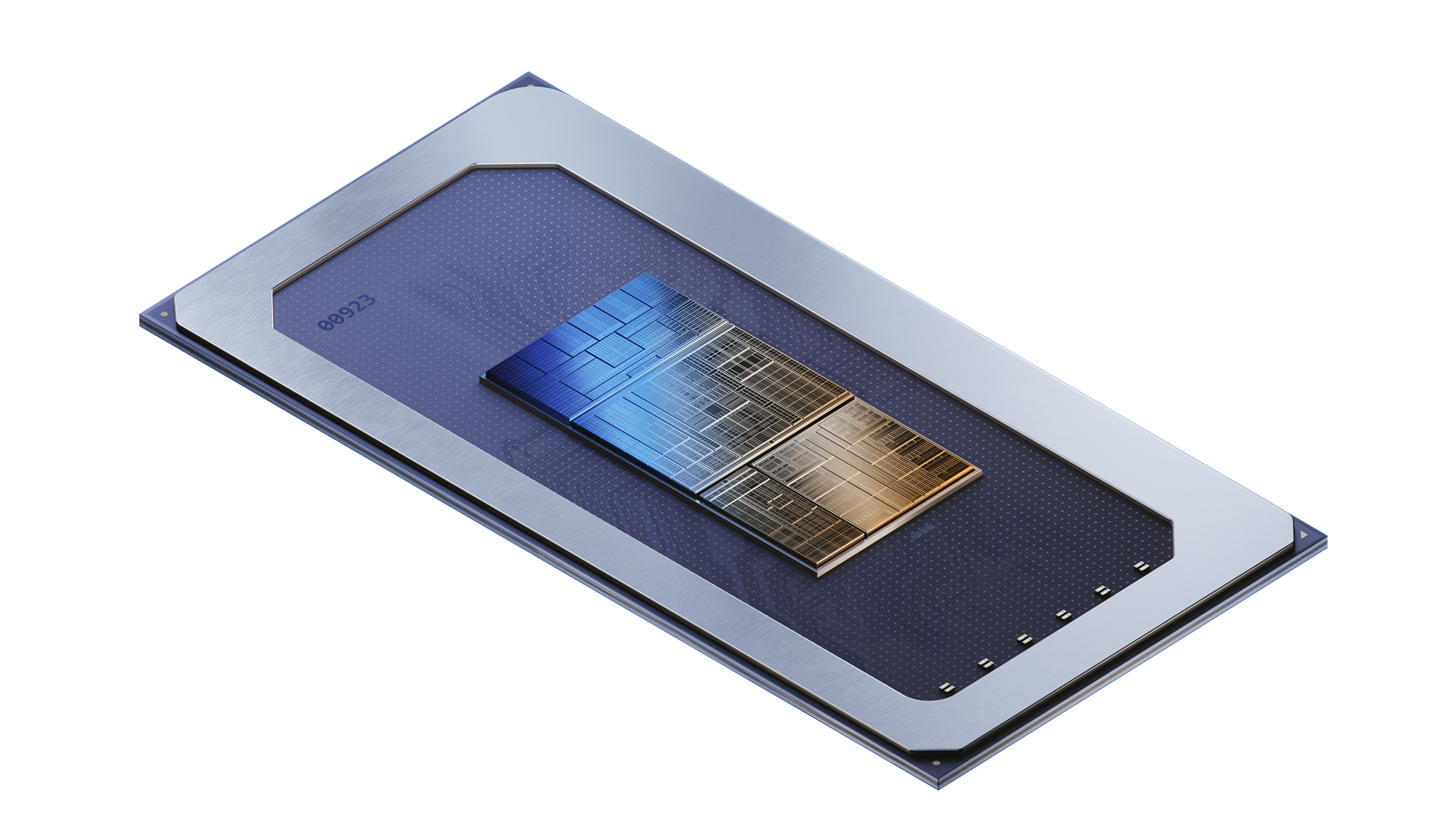

Intel detailed its client-side solution for AI hardware processing in its upcoming 14th generation Core mobile processors, code-named Meteor Lake, at Computex 2023 in Taiwan, which runs through June 2. Since the public launch of ChatGPT, interest in large language models and generative AI, and more importantly in what they can do (ranging from creating unique images from a description to helping MSPs drive business efficiencies), has reached a fever pitch in the tech industry.

While much of what is driving the AI frenzy is powered by massive data centers, the client is already handling far more AI-powered experiences than end users may realize. For example, background removal and video/audio noise suppression are both inference workloads that could perform better or draw less power on dedicated hardware. As such, Intel is building what is essentially a third processor type into its SoCs, the neural VPU (based on the third-generation Myriad X Vision Processing Unit from Intel-owned Movidius), to accelerate or more efficiently perform existing client AI workloads and give developers the option to move some cloud-oriented workloads closer to the edge.

Intel didn’t disclose many technical details about the upcoming VPU, such as how much real estate it will occupy on the chip or any performance figures; Meteor Lake is still several months away from launch. It did share that the chip’s three compute engines will work together and, depending on the workload, will not always be used in a mutually exclusive way. The CPU is best for lightweight, single-inference, low-latency tasks, while the GPU is best suited to high-performance media needs. The VPU sits between the two and is best used for offloading sustained AI workloads that benefit from its lower power consumption.
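The division of labor Intel describes can be pictured as a simple routing rule. The sketch below is purely illustrative: the `Workload` type, its fields, and `pick_engine` are hypothetical names invented here, not an Intel API, and real scheduling would be handled by the OS, drivers, and runtimes, which may also spread one workload across several engines.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a client AI task (illustrative only)."""
    name: str
    sustained: bool    # runs continuously, e.g. for the length of a video call
    media_heavy: bool  # needs high-throughput media processing


def pick_engine(w: Workload) -> str:
    """Map a workload to the engine the article says suits it best."""
    if w.media_heavy:
        return "GPU"   # high-performance media needs
    if w.sustained:
        return "VPU"   # sustained AI work that benefits from low power
    return "CPU"       # lightweight, single-inference, low-latency tasks


if __name__ == "__main__":
    for task in (
        Workload("one-off text classification", sustained=False, media_heavy=False),
        Workload("audio noise suppression", sustained=True, media_heavy=False),
        Workload("video upscaling", sustained=True, media_heavy=True),
    ):
        print(f"{task.name}: {pick_engine(task)}")
```

The ordering of the checks is an assumption made for the sketch; the article only says which engine suits which kind of task, not how conflicts are resolved.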

Power consumption is the key benefit in the immediate future and the primary reason Intel is focused on bringing its neural VPU to mobile SKUs first, at least until software developers learn new ways to leverage the hardware. No details were shared about its desktop SKUs, but odds are Intel will look to add AI accelerators to some, if not all, of its desktop SKUs over time.

Leveraging the new hardware means that the developer community needs OS support and tools. On that front, Intel is partnering with Microsoft, along with both companies’ ISV partners, to scale Meteor Lake across the Windows ecosystem. “We’re excited to collaborate on AI with Intel with the scale Meteor Lake will bring to the Windows PC ecosystem,” says Pavan Davuluri, corporate vice president, Windows Silicon and System Integration at Microsoft.

In addition to ONNX Runtime support enabled through OpenVINO-EP and DirectML-EP, developers can leverage more effective machine learning on WinML/DirectML for acceleration on the neural VPU and GPU, as well as new Windows Studio Effects including background blur, eye contact, automatic framing, and voice focus.
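For developers, the "EP" in those names refers to ONNX Runtime execution providers, which are selected when an inference session is created. The following is a minimal sketch of choosing providers in priority order, assuming the `onnxruntime` Python package. The preference order and the `pick_providers` helper are assumptions made for illustration, and which device a provider actually targets (CPU, GPU, or VPU) depends on the installed build and on provider options not shown here.

```python
# Provider names as registered by ONNX Runtime; the priority order is an
# assumption for this sketch, not a recommendation from Intel or Microsoft.
PREFERRED = [
    "OpenVINOExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
]


def pick_providers(available, preferred=PREFERRED):
    """Return the preferred providers that are available, in priority order.

    Falls back to the CPU provider if none of the preferred ones are present.
    """
    chosen = [p for p in preferred if p in available]
    return chosen or ["CPUExecutionProvider"]


if __name__ == "__main__":
    try:
        import onnxruntime as ort
    except ImportError:
        print("onnxruntime is not installed; nothing to query")
    else:
        providers = pick_providers(ort.get_available_providers())
        print("Would create a session with:", providers)
        # "model.onnx" is a placeholder path, not a file from the article:
        # session = ort.InferenceSession("model.onnx", providers=providers)
```

Keeping the CPU provider last in the list gives the session something to fall back on when a hardware-specific provider is missing.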

Intel isn’t alone in integrating AI acceleration into its SoCs. Over the weekend at Computex, Nvidia showcased several new solutions and initiatives to power AI in the cloud, while earlier this month, AMD introduced its Ryzen AI technology, which will be integrated into its upcoming Ryzen 7040U series ultraportable processors. Just how the Intel and AMD technologies compare, and whether AI workloads transform the PC experience in the way both companies are hoping, will become clear as products hit the market.